Schedule MongoDB Backups with GitLab CI/CD

GitLab CI/CD lets you schedule pipelines, and I find it a convenient way to manage my Internet crons.

One use I make of GitLab CI/CD schedules is to back up MongoDB data. The pipeline, when run, SSHs into the server holding the MongoDB instance, runs mongodump, and pipes the output straight to s3cmd, which stores the dumped archive in an S3-esque bucket.

Here’s what the pipeline looks like in .gitlab-ci.yml:

| |

Yes, there are just four lines in that script.

The first line TIMESTAMP=$(date +%Y%m%d%H%M%S) sets the TIMESTAMP variable. We use this to name our dumped archive.
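As a quick illustration, here is how that timestamp can feed into an archive name. The mongodb- prefix and the .archive extension are assumptions made for this sketch; the actual script may name its archives differently.

```shell
# Build a sortable 14-digit timestamp: year, month, day, hour, minute, second.
TIMESTAMP=$(date +%Y%m%d%H%M%S)

# Hypothetical archive name; the prefix and extension are illustrative only.
ARCHIVE_NAME="mongodb-${TIMESTAMP}.archive"

echo "$ARCHIVE_NAME"
```

Because the fields run from most to least significant, sorting the archive names alphabetically also sorts them chronologically.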

The second line prepares the CI/CD environment for the SSH connection. It expects the SSH host keys and private key in the PRODUCTION_HOST_KEYS and PRODUCTION_SSH_PRIVATE_KEY environment variables.
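A minimal sketch of what such a preparation step might do, assuming both variables hold the literal file contents. The prepare_ssh function name, the SSH_DIR override, and the id_rsa file name are all hypothetical choices for this example, not necessarily what the original script uses.

```shell
# Hypothetical helper: writes the host keys and private key where ssh looks for them.
# SSH_DIR is an assumed override for trying this outside CI; it defaults to ~/.ssh.
prepare_ssh() {
  ssh_dir="${SSH_DIR:-$HOME/.ssh}"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"

  # Trust only the host keys supplied through the CI/CD variable.
  printf '%s\n' "$PRODUCTION_HOST_KEYS" > "$ssh_dir/known_hosts"

  # ssh refuses private keys that other users can read, hence mode 600.
  printf '%s\n' "$PRODUCTION_SSH_PRIVATE_KEY" > "$ssh_dir/id_rsa"
  chmod 600 "$ssh_dir/id_rsa"
}
```

In a job, calling prepare_ssh once before the ssh command would be enough. Pinning the host keys this way means the job never has to accept an unknown host interactively.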

The third line configures s3cmd. You need to keep a valid s3cmd configuration file in the PRODUCTION_S3CFG environment variable. It should allow you to upload objects to the bucket where you want to keep your MongoDB dump archives.
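For illustration, a step like this could simply write the variable to the file s3cmd reads by default, ~/.s3cfg. The configure_s3cmd function name and the S3CFG_PATH override are hypothetical and exist only so the sketch can be tried outside CI.

```shell
# Hypothetical helper: writes the s3cmd configuration held in PRODUCTION_S3CFG.
# S3CFG_PATH is an assumed override; s3cmd reads ~/.s3cfg by default.
configure_s3cmd() {
  s3cfg_path="${S3CFG_PATH:-$HOME/.s3cfg}"
  printf '%s\n' "$PRODUCTION_S3CFG" > "$s3cfg_path"

  # The file contains access keys, so keep it private.
  chmod 600 "$s3cfg_path"
}
```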

The fourth line is a mouthful. But it’s easy if you look at it in parts.

- ssh -p $SSH_PORT root@$SSH_HOST: This SSHs into the remote server and runs the command that follows.

- "mongodump --uri=mongodb://...": This is the command that is run on the remote server. It dumps MongoDB into an archive. The archive is streamed to stdout, which in turn is streamed through the SSH connection and piped into the next command.

- s3cmd -v put - s3://...: This takes the dumped archive, piped to its stdin, and uploads it to an S3-esque object storage bucket.
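Put together, the three parts might be sketched like this. MONGODB_URI and S3_TARGET are placeholder variables standing in for the elided mongodb://... and s3://... values, the backup_mongodb name is hypothetical, and the --archive flag is an assumption based on the description of the archive being streamed to stdout.

```shell
# Hypothetical wrapper around the fourth line. MONGODB_URI and S3_TARGET are
# placeholders for the elided mongodb://... and s3://... values.
backup_mongodb() {
  # --archive without a file name makes mongodump write the archive to stdout.
  # That output travels back over the SSH connection and into s3cmd,
  # which reads the object body from stdin when given "-" as the source.
  ssh -p "$SSH_PORT" "root@$SSH_HOST" "mongodump --uri='$MONGODB_URI' --archive" \
    | s3cmd -v put - "$S3_TARGET"
}
```

Nothing is written to disk on either side, so the server does not need free space for the dump. One thing to keep in mind: in a plain pipe the exit status comes from s3cmd, so a failing mongodump can go unnoticed unless the shell supports and enables pipefail.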

The Docker image you need for this should have the following tools/packages:

- ca-certificates

- gnupg2

- openssh-client

- s3cmd

Something like this will do:

| |
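Whichever image you end up with, a quick check along these lines can confirm that the commands the pipeline calls are actually available. The missing_tools helper is hypothetical, and the sketch only checks the two binaries the script invokes directly.

```shell
# Hypothetical helper: prints each named command that is not on the PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Prints nothing when both binaries are present in the image.
missing_tools ssh s3cmd
```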

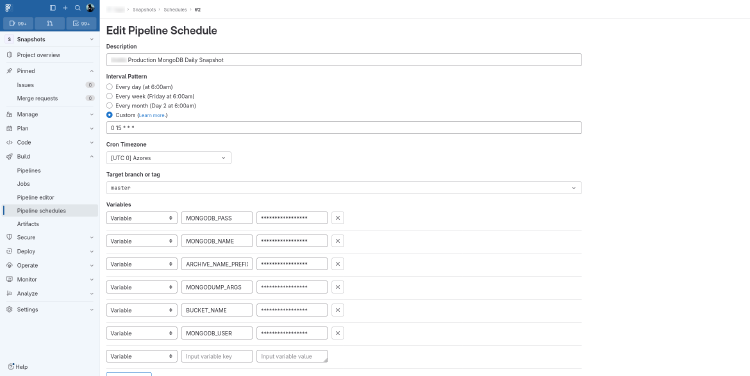

And that’s it. You now just need to set up a schedule for this pipeline from the GitLab web interface.

This post is the 31st of my #100DaysToOffload challenge. Want to get involved? Find out more at 100daystooffload.com.